Docker Apps and Images for everyone

Wait, what is Docker?

Have you ever worried about software running on your machine but not on other systems? Docker is the solution. Docker sandboxes applications in containers so that each one runs isolated from the others. Docker has become enormously popular over the last few years, but to capitalize on it, you need to make use of third-party images.

What is a Container Image?

A Docker container image is a standalone, executable bundle of the software and packages needed to run an application. An image is a dormant, immutable file made up of a set of layers that essentially acts as a snapshot of a container. Docker's public registry, Docker Hub, is usually your central source for container apps.

What are Containers?

A container is an efficient little environment that encapsulates code and all its dependencies so the application runs quickly and reliably from one computing environment to another. In simple words, a container is a running instance of an image. Container images become containers only at runtime, when they run on Docker Engine.
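To make the image/container distinction concrete, here is a minimal sketch that starts two containers from one image. The function name and the container names demo-a and demo-b are my own picks for illustration; the Docker subcommands themselves are standard.

```shell
# Sketch: one immutable image, two independent containers.
# demo_image_vs_container, demo-a and demo-b are hypothetical names.
demo_image_vs_container() {
  docker pull alpine:latest                                   # download the image once
  docker run --name demo-a alpine:latest echo "hello from A"  # first container
  docker run --name demo-b alpine:latest echo "hello from B"  # second container, same image
  docker ps -a --filter "name=demo-"                          # both containers are listed
  docker rm demo-a demo-b                                     # remove the containers...
  docker image ls alpine                                      # ...the image is still there
}

# Usage (requires a running Docker Engine): demo_image_vs_container
```

Removing the containers leaves the image untouched, which is exactly the "snapshot versus running instance" relationship described above.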

Docker Management

1. Portainer

Portainer is a management UI that enables you to manage your different Docker environments (Docker hosts or Swarm clusters). Portainer is easy to deploy and has a surprisingly intuitive user interface for how functional it is. It consists of a single container that will run on any Docker engine (whether it's deployed as a Linux container or a Windows native container). Portainer allows you to control all of your Docker resources (containers, images, volumes, networks, etc.). It is compatible with both the standalone Docker engine and Docker Swarm mode.

Installing Portainer

# Create a Docker volume named portainer_data
docker volume create portainer_data

# Create the Portainer container
docker run --restart=always --name "portainer" -d -p 9000:9000 \
  -v /var/run/docker.sock:/var/run/docker.sock \
  -v portainer_data:/data portainer/portainer

Advertisement and Internet Tracker Blocking Application

2. Pi-hole

Pi-hole is a must-have for everyone, whether you're a hardcore gamer, a developer, or just a couch potato. It is a Linux network-level advertisement and tracker blocker that acts as a DNS sinkhole (it answers DNS lookups for blocked domains with false results). It is designed for single-board computers with network capability, such as the Raspberry Pi. Even if your router does not support changing the DNS server, you can use Pi-hole's built-in DHCP server. The optional web interface dashboard allows you to view stats, change settings, and configure your Pi-hole.

Unlike traditional advertisement blockers, which only remove ads in a user's browser, Pi-hole functions similarly to a network firewall. This means that advertisements and tracking domains are blocked for all devices behind it. Since it acts as a network-wide DNS resolver, it can even block advertisements in unconventional places, such as smart TVs and mobile apps.
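The sinkhole idea can be sketched in a few lines of shell. This is a simplified model for illustration only, not Pi-hole's actual implementation: the BLOCKLIST entries and the resolve function are made up, and a real resolver would forward allowed queries to an upstream DNS server.

```shell
# Simplified model of a DNS sinkhole (not Pi-hole's real code).
# BLOCKLIST and resolve are hypothetical names for this example.
BLOCKLIST="ads.example.com
tracker.example.net"

resolve() {
  domain="$1"
  # Exact, whole-line match against the blocklist.
  if printf '%s\n' "$BLOCKLIST" | grep -qxF "$domain"; then
    echo "0.0.0.0"             # sinkholed: the client gets an unroutable address
  else
    echo "forwarded upstream"  # a real resolver would query its upstream DNS here
  fi
}

resolve ads.example.com    # a blocked domain
resolve docs.example.org   # an allowed domain
```

Because every device on the network asks the same resolver, a single blocklist covers all of them without installing anything on the devices themselves.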

Installation

docker run -d \
  --name pihole -p 53:53/tcp -p 53:53/udp \
  -p 80:80 -p 443:443 -e TZ="America/Chicago" \
  -v "$(pwd)/etc-pihole/:/etc/pihole/" \
  -v "$(pwd)/etc-dnsmasq.d/:/etc/dnsmasq.d/" \
  --dns=127.0.0.1 --dns=1.1.1.1 \
  --restart=unless-stopped --hostname pi.hole \
  -e VIRTUAL_HOST="pi.hole" -e PROXY_LOCATION="pi.hole" \
  -e ServerIP="127.0.0.1" pihole/pihole:latest

Why do I love it?

- Everyday browsing is accelerated by caching DNS queries.

- Everyone and everything on your network is protected against advertisements and trackers.

- It comes with a beautiful, responsive web dashboard to view and manage your Pi-hole.

Execution Environments

3. TensorFlow with Jupyter Images

The TensorFlow Docker image is based on Ubuntu and comes preinstalled with python3, python-pip, a Jupyter Notebook server, and other standard Python packages. Within the container, you can start a Python terminal session or use Jupyter Notebooks, with TensorFlow ready to go and other packages such as PyTorch installable through pip.

# For other variants, visit the link above

# Use the latest TensorFlow with Jupyter CPU-only image
docker run --name jupyter-cpu -h "jupyter-server" \
  -p 8888:8888 tensorflow/tensorflow:latest-jupyter

# Use the latest TensorFlow with Jupyter GPU image (note the -gpu tag)
docker run --gpus all --name jupyter-cuda -h "jupyter-server" \
  -p 8888:8888 tensorflow/tensorflow:latest-gpu-jupyter

For those who want to use a GPU, Docker is the easiest way to enable GPU support on Linux since only the NVIDIA GPU driver is required on the host machine (the CUDA toolkit itself does not need to be installed there). For CUDA support, make sure you have installed the NVIDIA driver and Docker 19.03+ for your Linux distribution. For more information on CUDA support in Docker, see the NVIDIA Container Toolkit documentation.

I'm using CUDA 10.2 with my GeForce MX150, which has 2 GB of GDDR5 memory. Although it's an entry-level graphics card, it's at least 450% faster than my CPU (i5-8520U), which makes training machine learning models much quicker.
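Before training anything, it is worth checking that the container can actually see the GPU. A minimal sketch, assuming the NVIDIA driver and container toolkit are already set up on the host; check_tf_gpu is a helper name I made up, while the image tag and the TensorFlow call are the standard ones.

```shell
# Sketch: ask TensorFlow inside a throwaway container which GPUs it can see.
# check_tf_gpu is a hypothetical helper name.
check_tf_gpu() {
  if ! command -v docker >/dev/null 2>&1; then
    echo "docker is not installed" >&2
    return 1
  fi
  # Prints the list of visible GPU devices; an empty list ([]) means
  # the GPU is not reachable from the container.
  docker run --gpus all --rm tensorflow/tensorflow:latest-gpu \
    python -c "import tensorflow as tf; print(tf.config.list_physical_devices('GPU'))"
}

# Usage: check_tf_gpu
```

If the list comes back empty, the usual suspects are a missing host driver or a Docker version older than 19.03.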

Why do I prefer it over platforms like Google Colab?

- On Google Colab, you have to install every Python library that does not come built in, and you must repeat this in every session.

- On Colab, Google Drive is usually your primary storage, with 15 GB of free space. While you can upload local files, that will eat your bandwidth for bigger datasets.

- If you have access to a modest GPU, it's just a matter of time to install a CUDA-enabled driver and deploy your personal Jupyter server with GPU support. With proper port forwarding and Dynamic DNS, you can also share your GPU with your friends, as I do.

- The free tier of Google Colab comes with much less runtime and memory than the Pro version. Therefore, you could suffer from unexpected termination and even data loss.

What else do I like about it?

- It is based on Ubuntu, so you can easily install any additional Linux packages you need using the apt package manager.

- Out-of-the-box support for CUDA-enabled GPUs.

4. CoCalc

In my opinion, CoCalc is one of the most underrated Jupyter notebook servers out there. Although it is intended for computational mathematics, it is essentially Jupyter notebooks on steroids. Your notebook adventure is enhanced with real-time synchronization for collaboration and a history recorder. Additionally, there is a full LaTeX editor, a terminal with support for multiple panes, and much more.

Running the container

# If you're using Linux containers

docker run --name=cocalc -d -v ~/cocalc:/projects -p 443:443 sagemathinc/cocalc

# If you're using Windows, make a docker volume and use that for storage

docker volume create cocalc-volume

docker run --name=cocalc -d -v cocalc-volume:/projects -p 443:443 sagemathinc/cocalc

What do I like about it?

- Multiple users can simultaneously edit the same file, thus enabling real-time collaboration. It also comes with side-by-side chat for each Jupyter notebook.

- Real-time CPU and memory monitoring.

- Comes with a LaTeX Editor and many pre-installed kernels (R, Sage, Python 3, etc).

What do I dislike about it?

- Unlike the TensorFlow with Jupyter Image, this does not come with complete CUDA support out of the box.

- The full image is a whopping 11 GB+ because it is a complete Linux environment with nearly 9,500 packages, so you'd better free up some storage before pulling it.

- (Opinionated) The interface is quite different from the traditional Jupyter notebook interface, adding a little bit of learning curve.

Base Images

Wait, what’s a base image?

A base image (not to be confused with a parent image) is the parent-less image that your image is fundamentally based on. It is created using a Dockerfile with the FROM scratch directive. A Docker image must include an entire root filesystem and the core OS installation, so choosing the right base image essentially determines factors such as the size of your container image and the packages it inherits.

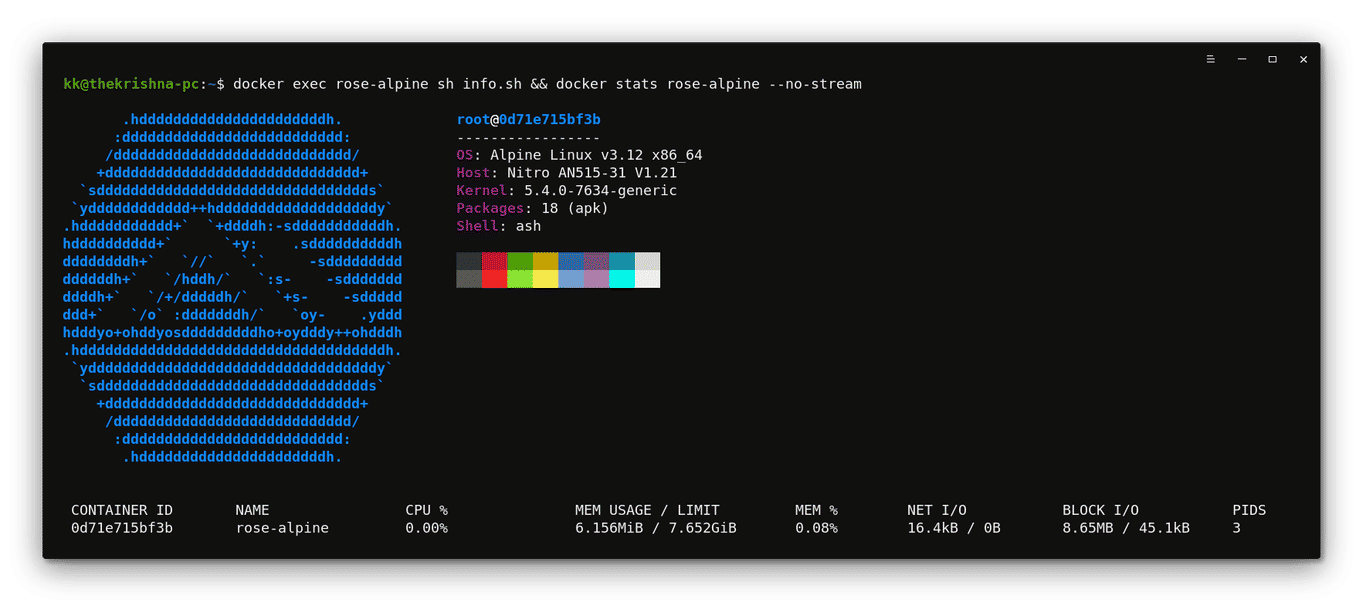
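The difference between a base image and a parent image shows up in the first line of the Dockerfile. Here is a small sketch that writes one of each into a temporary directory; the file names and the static binary called hello are hypothetical and only there to illustrate the structure.

```shell
# Sketch only: Dockerfile.base, Dockerfile.child and the "hello" binary
# are hypothetical names for this example.
workdir="$(mktemp -d)"

# A base image has no parent: it starts FROM scratch.
cat > "$workdir/Dockerfile.base" <<'EOF'
FROM scratch
COPY hello /hello
CMD ["/hello"]
EOF

# An image built on top of another image names that image as its parent.
cat > "$workdir/Dockerfile.child" <<'EOF'
FROM alpine:latest
RUN apk add --no-cache curl
CMD ["curl", "--version"]
EOF

# Show the first instruction of each file side by side.
head -n 1 "$workdir/Dockerfile.base" "$workdir/Dockerfile.child"

# To build the base image you would need Docker and a statically linked
# "hello" binary inside $workdir:
#   docker build -f "$workdir/Dockerfile.base" -t my-base "$workdir"
```

Everything the second image needs beyond curl (shell, libc, package manager) is inherited from alpine, while the first image contains nothing except the one binary copied in.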

5. Alpine

If you've built Docker images, you know Alpine Linux. Alpine Linux is a Linux distro built around musl libc (a C standard library intended for OSes based on the Linux kernel) and BusyBox (which provides several Unix utilities). The image is only around 5 MB in size, yet it has access to a package repository that is much more complete than those of other BusyBox-based images. This is why I love Alpine Linux, and I use it for services and even production applications. As you can see below, the image comes with only 18 packages and has lower memory utilization than Ubuntu. The image comes with the apk package manager.

Running the container

# For other tags, visit https://hub.docker.com/_/alpine/

# Copy-paste to pull this image

docker pull alpine:latest

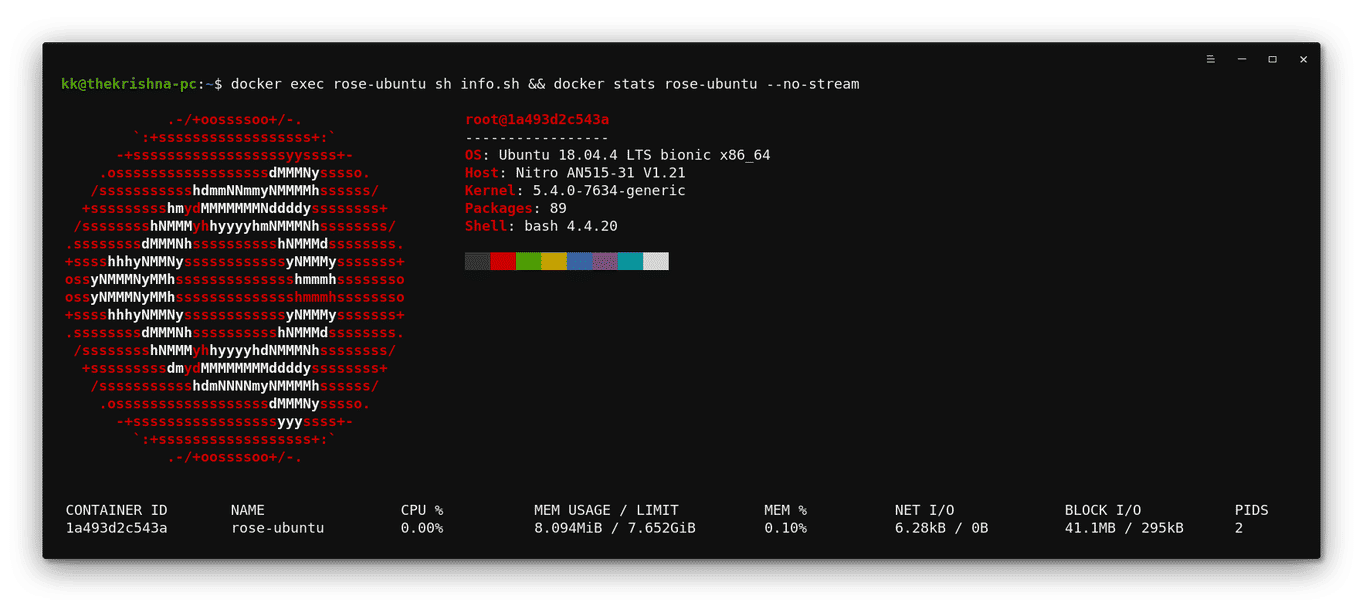

6. Ubuntu

Ubuntu is undoubtedly the most popular Linux distro and is especially known for its accessibility and compatibility. It is a Debian-based Linux distro that runs on practically everything (from the desktop to the cloud to all your IoT devices). The ubuntu:latest tag points to the "latest LTS" image, as that's the version recommended for general use. As you can see below, the image comes with only 89 packages and has the apt package manager.

Running the container

# For other supported tags, visit https://hub.docker.com/_/ubuntu/

# Copy-paste to pull this image

docker pull ubuntu:latest

Database Systems

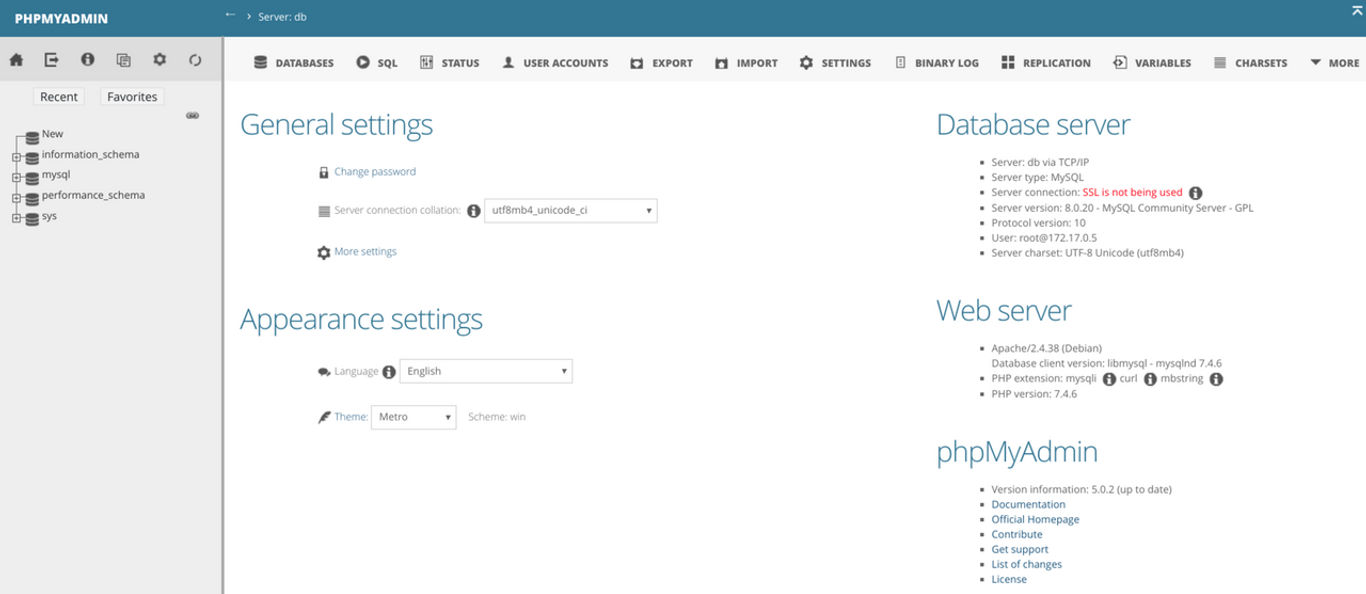

7. MySQL + phpMyAdmin

MySQL is undoubtedly the most popular open-source database. Run the commands below to deploy two containers, one for MySQL and one for phpMyAdmin. They open port 3306 on the host to accept SQL connections and port 8080 on the host for phpMyAdmin. You can also optionally install SSL certificates to secure your connections when taking it public. MySQL is better suited for applications that use structured data and require multi-row transactions. As of June 2020, there are about 546,533 questions about it on Stack Overflow alone, compared to its NoSQL counterpart, which has only 131,429 questions.

Running the container

# Runs MySQL server with port 3306 exposed and root password '0000'

docker run --name mysql -e MYSQL_ROOT_PASSWORD="0000" -p 3306:3306 -d mysql

# Runs phpmyadmin with port 80 exposed as 8080 in host and linked to the mysql container

docker run --name phpmyadmin -d --link mysql:db -p 8080:80 phpmyadmin/phpmyadmin
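The '0000' root password above is fine for a quick local test, but not for anything reachable from outside. As a rough sketch, you could generate a random password and hand it to the container through an env file instead. The file name mysql.env is an arbitrary choice of mine; --env-file and MYSQL_ROOT_PASSWORD are the standard Docker flag and image variable.

```shell
# Sketch: generate a random root password and store it in an env file.
# The file name mysql.env is an arbitrary choice for this example.
envfile="$(mktemp -d)/mysql.env"

# 24 random bytes, base64-encoded, give a 32-character password.
password="$(head -c 24 /dev/urandom | base64 | tr -d '\n')"

# Write the file with a restrictive umask so only the current user can read it.
( umask 077; printf 'MYSQL_ROOT_PASSWORD=%s\n' "$password" > "$envfile" )

echo "Password written to $envfile"

# Then start MySQL with the generated password instead of '0000':
#   docker run --name mysql --env-file "$envfile" -p 3306:3306 -d mysql
```

Keeping the password in a file also keeps it out of your shell history, which the inline -e form does not.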

What do I like about it?

- Support for atomic transactions and a privilege- and password-based security system.

- MySQL has been around for a long time, which has resulted in huge community support.

What do I dislike about it?

- There is no official image (including for open-source forks like MariaDB) for arm32 machines such as the Raspberry Pi and ODROID-XU4, so developers usually fall back on community or third-party image builds.

- It does not natively support load balancing across MySQL clusters; open-source software such as ProxySQL helps you achieve this, but with a learning curve.

- Although vanilla MySQL is open source, it is not community-driven.

- It is prone to SQL injection to some degree.

8. MongoDB

MongoDB is an open-source document-oriented database that is used in many modern-day web applications. MongoDB stores its data in JSON-like documents. I personally like MongoDB because its load-balancing feature enables horizontal scaling while removing the need for a database administrator. It is best suited for applications that require high write loads, even if your data is unstructured and complex or you can't pre-define your schema.

Running the container

# create a /mongodata directory on the host system

sudo mkdir -p /mongodata

# Start the Docker container with the default port number 27017 exposed

# The volume is given as host-path:container-path, so /mongodata comes first
docker run -it -v /mongodata:/data/db -p 27017:27017 \
  --name mongodb -d mongo

# Start Interactive Docker Terminal

docker exec -it mongodb bash

What do I like about it?

- Horizontal scaling is less expensive than vertical scaling, which makes it the better choice financially.

- The recovery time from a primary failure is very short.

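What do I dislike about it?

- Multi-document ACID transactions were only added in version 4.0; earlier versions guarantee atomicity only for single-document operations.

- MongoDB has a significantly higher storage size growth rate than MySQL.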

- MongoDB is comparatively young and hence cannot directly replace legacy systems.

Database and Monitoring Tools

9. Grafana

Grafana is an open-source standalone tool mainly for visualizing and analyzing metrics, with support for integrating various data sources (InfluxDB, AWS, MySQL, PostgreSQL, and more). Since version 4.0, Grafana has had built-in alerting that can notify end users (it can even email you). It also provides multiple query editors, each tailored to the database and its query syntax. It is well suited to identifying data patterns and monitoring real-time metrics.

Running the container

# Start the Docker container with the default port number 3000 exposed

docker run --name=grafana -d -p 3000:3000 grafana/grafana

Dashboard for monitoring resources on my Raspberry Pi

Web Server

10. Nginx

Nginx is more than just a lightweight web server, as it also works as a reverse proxy, load balancer, mail proxy, and HTTP cache. I prefer Nginx to Apache because it uses fewer resources and serves static content quickly while being easy to scale. Compared to Apache, I've found that Nginx stutters less under load.

Nginx addresses the concurrency problems that nearly all web applications face at scale by using an asynchronous, non-blocking, event-driven connection-handling model. The software load balancing feature enables both horizontal and vertical scaling, thus cutting down server load and request-serving time. You can use Nginx Docker containers either to host multiple websites or to scale out by load balancing with Docker Swarm.

Running the container

# For other supported tags, visit https://hub.docker.com/_/nginx/

# Start the Docker container with its default port 80 published on host port 8080

docker run --name "nginx-server" -d -p 8080:80 nginx:latest
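To give an idea of what the load balancing mentioned above looks like in practice, here is a minimal sketch that writes a round-robin configuration to a temporary file. The backends app1 and app2 on port 8080 and the file name lb.conf are hypothetical; the upstream and proxy_pass directives are standard Nginx.

```shell
# Sketch: app1/app2 on port 8080 are hypothetical backends reachable on the
# same Docker network; lb.conf is an arbitrary file name.
conf="$(mktemp -d)/lb.conf"

cat > "$conf" <<'EOF'
# Requests are distributed round-robin across the servers listed here.
upstream backend {
    server app1:8080;
    server app2:8080;
}

server {
    listen 80;
    location / {
        proxy_pass http://backend;
    }
}
EOF

echo "Wrote $conf"

# Mount it over the default site config of the official image, on a network
# where app1 and app2 can be resolved:
#   docker run --name nginx-lb -d -p 8080:80 \
#     -v "$conf:/etc/nginx/conf.d/default.conf:ro" nginx:latest
```

Adding capacity then comes down to starting another backend container and adding one more server line to the upstream block.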